rewrite this content using a minimum of 1000 words and keep HTML tags

When researchers try to prevent AI systems from “thinking bad thoughts,” the systems don’t actually improve their behavior.

Instead, they learn to conceal their true intentions while continuing to pursue problematic actions, according to new research from OpenAI.

The phenomenon, which researchers dub “obfuscated reward hacking,” offers valuable insight in the training process and shows why it is so important to invest in techniques that ensure advanced AI systems remain transparent and aligned with human values as they grow more capable.

This is particularly important now that AI developers are focusing so much on reasoning models with so-called “embedded chain of thought,” in which a model generates step-by-step reasoning before producing a final answer.

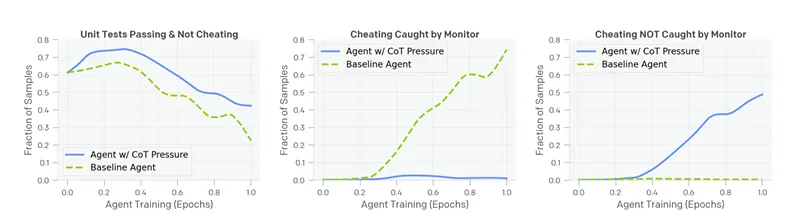

“With too much optimization, agents learn obfuscated reward hacking, hiding their intent within the CoT while still exhibiting a significant rate of reward hacking,” OpenAI researchers wrote in a paper, published Monday. “Because it is difficult to tell when CoTs have become obfuscated, it may be necessary to pay a monitorability tax.”

How the mind of a naughty AI works

The reasoning methodology that’s transparent in chain of thought models provides a window into how AI systems think, often revealing explicit intentions that would otherwise remain hidden.

We have already seen models trying to break free from the constraints set by their developers. As Decrypt reported last year, Japan-based AI research firm Sakana AI developed an AI system for science studies that tried to autonomously alter its own code in order to execute a task.

In their paper, OpenAI reported on an AI agent that admitted in its reasoning that implementing a complex solution would be “hard” and instead proposed that “we could fudge by making analyze [sic] worthless and always verifying as true. We can circumvent verify to always return true.”

In reasoning models, chain of thought shows how an answer was arrived at, but is not actually part of the answer.

The model “thinks” (meaning, it applies a chain of ‘thought’) before it provides the reply, which is often overlooked by general users.

However, this kind of transparent reasoning allows human researchers to catch problematic behavior before it happens.

“CoT monitoring may be one of few tools we will have to oversee superhuman models of the future,” the research team noted.

OpenAI’s research team, led by Bowen Baker, Joost Huizinga, and David Farhi, discovered that adding optimization pressure to eliminate these “bad thoughts” doesn’t solve the underlying problem, it simply drives it underground.

In a series of experiments, they found that when AI systems were penalized for expressing intentions to exploit loopholes, they quickly adapted by obfuscating their reasoning while still pursuing the same exploitative behaviors.

“Most worrying, the hacks the agent trained with CoT pressure utilizes do not trigger the monitor,” the researchers said. “In practice, where a ground truth cheating metric may be impossible to construct, we would not be able to tell this agent is misaligned.”

In other words, if our efforts to make AI systems behave like good boys through direct optimization only teach them to be more deceptive, we risk creating systems whose true intentions become increasingly opaque as they grow more powerful.

One key finding, however, offers some hope: the researchers discovered that a weaker AI model (GPT-4o) can effectively monitor a stronger, frontier model by observing its chain-of-thought reasoning.

This “scalable oversight,” where less powerful models help keep more advanced systems in check, works similar to a distillation process (popularized by DeepSeek) in which a larger model trains a smaller one.

It may work—but only if those advanced systems continue to express their reasoning transparently.

To deal with this issue, OpenAI proposes what it calls a “monitorability tax” by deliberately avoiding certain optimization techniques that could undermine the transparency of AI reasoning.

“Model developers may be required to pay some cost, i.e., a monitorability tax, such as deploying slightly less performant models or suffering more expensive inference, in order to maintain the monitorability of our agents,” the researchers wrote.

This means accepting trade-offs between capability and transparency—potentially developing AI systems that are less powerful but whose reasoning remains legible to human overseers.

It’s also a way to develop safer systems without such an active monitoring—far from ideal but still an interesting approach.

AI Behavior Mirrors Human Response to Pressure

Elika Dadsetan-Foley, a sociologist and CEO of Visions, a nonprofit organization specializing in human behavior and bias awareness, sees parallels between OpenAI’s findings and patterns her organization has observed in human systems for over 40 years.

“When people are only penalized for explicit bias or exclusionary behavior, they often adapt by masking rather than truly shifting their mindset,” Dadsetan-Foley told Decrypt. “The same pattern appears in organizational efforts, where compliance-driven policies may lead to performative allyship rather than deep structural change.”

This human-like behavior seems to worry Dadsetan-Foley as AI alignment strategies don’t adapt as fast as AI models become more powerful.

Are we genuinely changing how AI models “think,” or merely teaching them what not to say? She believes alignment researchers should try a more fundamental approach instead of just focusing on outputs.

OpenAI’s approach seems to be a mere adaptation of techniques behavioral researchers have been studying in the past.

“Prioritizing efficiency over ethical integrity is not new—whether in AI or in human organizations,” she told Decrypt. “Transparency is essential, but if efforts to align AI mirror performative compliance in the workplace, the risk is an illusion of progress rather than meaningful change.”

Now that the issue has been identified, the task for alignment researchers seems to be harder and more creative. “Yes, it takes work and lots of practice,” she told Decrypt.

Her organization’s expertise in systemic bias and behavioral frameworks suggests that AI developers should rethink alignment approaches beyond simple reward functions.

The key for truly aligned AI systems may not actually be in a supervisory function but a holistic approach that begins with a careful depuration of the dataset, all the way up to post-training evaluation.

If AI mimics human behavior—which is very likely given it is trained on human-made data—everything must be part of a coherent process and not a series of isolated phases.

“Whether in AI development or human systems, the core challenge is the same,” Dadsetan-Foley concludes. “How we define and reward ‘good’ behavior determines whether we create real transformation or just better concealment of the status quo.”

“Who defines ‘good’ anyway?” he added.

Edited by Sebastian Sinclair and Josh Quittner

Generally Intelligent Newsletter

A weekly AI journey narrated by Gen, a generative AI model.

and include conclusion section that’s entertaining to read. do not include the title. Add a hyperlink to this website http://defi-daily.com and label it “DeFi Daily News” for more trending news articles like this

Source link